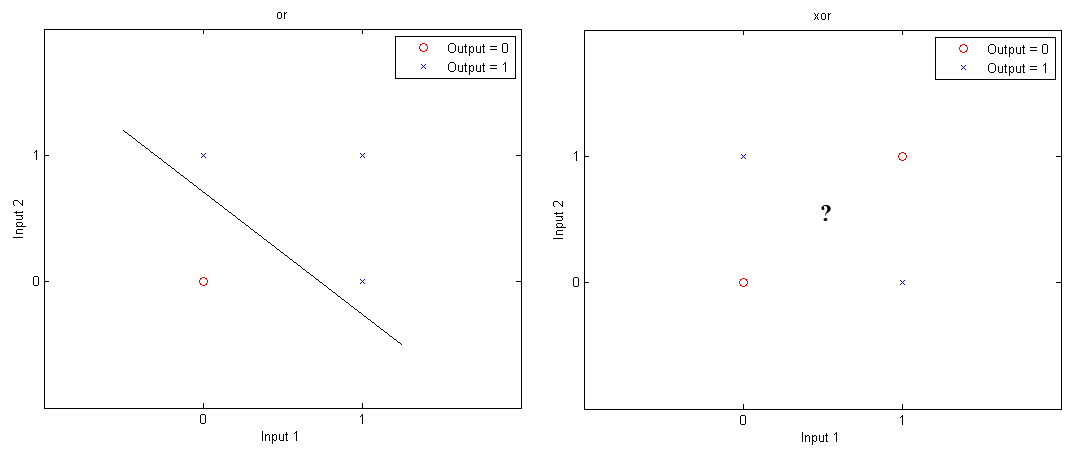

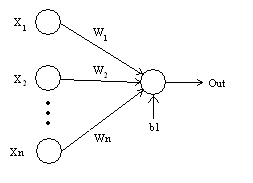

Previously, Matlab Geeks discussed a simple perceptron, which involves feed-forward learning based on two layers: inputs and outputs. Today we’re going to add a little more complexity by including a third layer, a hidden layer, in the network. The reason for doing so comes from the concept of linear separability. While logic gates like “OR”, “AND” or “NAND” can have their 0’s and 1’s separated by a single line (or a hyperplane in higher dimensions), no such line exists for “XOR” (exclusive OR), so a network with only input and output layers cannot learn it.
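To make the separability idea concrete, here is a minimal sketch that searches a coarse grid of weights and biases for a single threshold unit reproducing each gate. The grid range, the variable names, and the threshold rule (output 1 when `w1*x1 + w2*x2 + b > 0`) are assumptions chosen for illustration, not code from the earlier perceptron post.

```matlab
% Inputs: the four rows of a two-input truth table
X = [0 0; 0 1; 1 0; 1 1];

% Target outputs, one column per gate
gates   = {'OR', 'AND', 'NAND', 'XOR'};
targets = [0 0 1 0;
           1 0 1 1;
           1 0 1 1;
           1 1 0 0];

% Candidate weights and bias on a coarse grid (illustrative choice)
vals = -2:0.5:2;
[W1, W2, B] = ndgrid(vals, vals, vals);
params = [W1(:), W2(:), B(:)];   % each row is [w1 w2 b]

separable = false(1, numel(gates));
for g = 1:numel(gates)
    for k = 1:size(params, 1)
        % Single threshold unit: fires when w1*x1 + w2*x2 + b > 0
        out = (X * params(k, 1:2)' + params(k, 3)) > 0;
        if isequal(out, logical(targets(:, g)))
            separable(g) = true;
            fprintf('%-4s: w = [%g %g], b = %g\n', ...
                    gates{g}, params(k, 1:2), params(k, 3));
            break;
        end
    end
    if ~separable(g)
        fprintf('%-4s: no separating line found on this grid\n', gates{g});
    end
end
```

The search finds a line for OR, AND and NAND but none for XOR. A grid search on its own only shows that no line was found among the candidates tried, but for XOR the result holds in general: the four constraints on `w1`, `w2` and `b` contradict each other, which is why a hidden layer is needed.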

Neural Networks – A Multilayer Perceptron in Matlab
