Hello again! Today I’m back with Lesson 2 of our ongoing five part series on ODE modeling. Previously, Lesson 1 introduced the use of ODEs as a method of modeling population dynamics and discussed a simple method of evaluating the equations. Today, we will look at Matlab’s implementation of the Runge-Kutta method for solving ODEs. Lesson 3 will explore techniques for designing more realistic models. Lesson 4 will discuss methods for matching these abstract models to empirical data. Finally, we will play around with some fun ‘chaotic’ systems in Lesson 5.

In the previous lesson, I showed how we could use a simple first order step method to evaluate an ODE. We saw that the method was crude, but could potentially produce passable results by decreasing the step size. Today, we are going to learn what ‘first order’ means, how higher order methods do better, and how to implement higher order solutions in Matlab.

In the simplest terms, the order of the solution refers to how fast the evaluation diverges from the true solution. A first order solution diverges on the order of its step size (which is usually referred to as h). A second order diverges on the order of its step size squared. Thus, assuming your step size is below 1, a higher ordered solution will be significantly more accurate than a lower one. The fact that higher order solutions are worse given a step size of more than 1 is known as Runge’s Phenomenon.

Now lets look at a possible second order solution method. Our first order method began at our initial point, calculated the slope at that point, and used the slope to make a linear interpolation forward over a give distance. The second order solution starts the same way, but then reevaluates the slope at the destination to double-check. Thus, when we calculate the first order value at x(t+h), we also calculate the slope at that point. Now we have two values for the slope, one at the beginning of the step and one at the end. Finally, we average these two values and start over at the beginning one last time to calculate our final value for the step. This second order method is known as Heun’s Method.

If that is confusing, don’t worry! It’s time to code it up so we can see it in action. In the last lesson, we played with the exponential function. We will do the same in this lesson. For this example I will use a step size of 0.5 and evaluate from 0 to 10. We will start with the first order solution for comparison:

% First order solution % Initialize the array, we will evaluate to t=10 using a step of 0.5 x1 = zeros(21,1); % The initial population is 1 x1(1) = 1; % For each step, the new population is equal to the % old population, plus the rate of change from before % (which is equal to the old population) for i = 2:21 x1(i) = x1(i-1) + .5 * x1(i-1); end

Simple! Now for the more complicated second order solution:

% Second order solution % Initialize the array, we will evaluate to t=10 using a step of 0.5 x2 = zeros(21,1); % The initial population is 1 x2(1) = 1; % Things are more complicated here. % We will go step by step. for i = 2:21 % First get the slope at the initial point % The ODE defines the slope to be the same as the function value slope1 = x2(i-1); % Use that slope to find the first order 'solution' first_order_sol = x2(i-1) + .5 * slope1; % Now we reevaluate the ODE to find the new slope at the % end of the step. Because the ODE defines the slope % to be equal to the value, this is very simple! slope2 = first_order_sol; % Finally, we average the two slopes to take % the real step forward x2(i) = x2(i-1) + .5 * (slope1 + slope2) / 2; end

Now, let’s compute the actual solution and plot all three for comparison. From the last lesson, we know that the real solution to the ODE is x(t) = e^t:

% Initialize the new array

sol = zeros(21,1);

% Evaluate the solution at each step.

% Remember that time 0 is stored in index 1.

% exp is Matlab's implementation of the exponential function.

for i = 1:21

sol(i) = exp(.5 * (i-1));

end

% Compare the two solutions

plot([0:.5:10], x1, [0:.5:10], x2, [0:.5:10], sol)

legend('x1', 'x2', 'sol', 2)

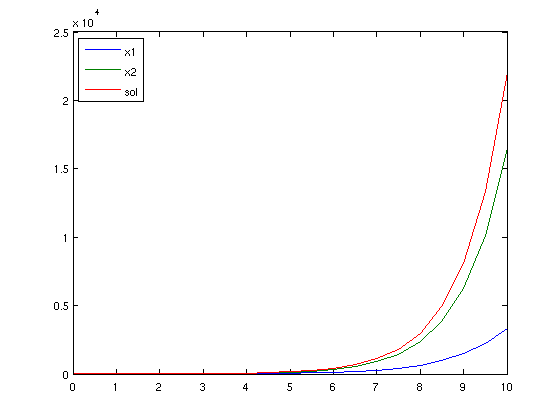

As you can see, the second order solution outperforms the first order one. In fact, using a step size of 0.5, the second order solution beats a first order solution using a step size of 0.1!

So, now what? Given this progress, why not just jump ahead and do a 100th order solution? To be honest, while first order solutions are extremely crude, most ODEs can be reasonably approximated by fourth order solutions. There is really no need to perform the extra computation to go beyond that. If you don’t want to take my word on this, great! We will be looking into sensitivity in Lesson Five when we take the time to play with some extremely sensitive equations.

Until then, let us assume that first order solutions are easy, second order solutions are better, and fourth order solutions are as accurate as we’ll ever need. So, should we go ahead and design a fourth order solution from scratch? Actually, no! Fortunately, there are a decent number of established fourth order methods. The first and the fastest is the standard fourth order Runge-Kutta method, otherwise known as RK4. This is a very simple and fast method, but it is not the most accurate. The main problem with RK4 is that it is similar to our previous examples in that it uses a fixed step size.

What does it mean to have a variable step size and why does it matter? It is important to realize that every implementation of a numerical solution is balancing speed and accuracy. To be more accurate, you have to perform more evaluations, which takes more time. Thus, the trick is to reduce the number of evaluations by only performing them where they matter the most. In the case of variable step size algorithms, an extra evaluation is made each step to calculate the expected error from that step. Then the step size of the algorithm is adjusted to account for this error. If the error is fairly low, the algorithm can be more confident and take longer steps. If the error is high, the algorithm knows that it must be more precise and will take smaller steps. This approach helps combine the best of both speed and accuracy. There are three well known fourth order methods that use variable step sizes: Fehlberg (RKF45), Cash-Karp (RKCK45), and Dormand-Prince (RKDP45). The bad news is that these variable step algorithms are much more difficult to implement. The good news is that Matlab has done the work for you! The ode45 function is Matlab’s implementation of the Dormand-Prince fourth order algorithm.

Now that we know the reasoning behind higher ordered solutions, why don’t we go ahead and implement one? Because Matlab has done most of the work for us, the actual implementation is fairly simple. All we need to do is to define our equation and then set some preferences. Matlab will take care of the rest!

First, let’s define the equation. To do so, we must create a new Matlab function that represents our ODE. Matlab requires functions to be saved in an ‘m’ file that shares the name of the function. Because our ODE represents the exponential function, we will start by creating the file ‘exponential.m’:

% exponential.m % % Implements the function dx/dt = x % % Inputs: % t - Time variable: not used here because our equation % is independent of time, or 'autonomous'. % x - Independent variable: this is our population % Output: % dx - First derivative: the rate of change of the % population, given the population function dx = exponential(t, x) % The exponential ODE function is simple! dx = x;

To use ode45, you must set up your equation this way. Specifically, you must write a function that takes two inputs: the time variable first and the independent variable second. The output must be the first time derivative of the independent variable. That means you must always solve your ODE for the time derivative before you can enter it into Matlab. As an aside: it is always a good idea to comment in the definitions of your input and output variables any time you write a new function.

Ok, we have our function definition! If we want, this is the time to set any evaluation preferences that we care about. We will do so by using the odeset function to save our preferences into a temporary variable. The details of this function are going to be outside the scope of this lesson. I may go into more detail in Lesson 5. Either way, I encourage you to look at its many options over at Mathworks and to play around with it on your own.

For this lesson, let’s try reducing our relative error tolerance for fun. Also, because we are supposedly implementing a population model, we should enforce that our population never drops below zero. I will save these results into a variable called ‘options’:

% Set our preferences for ode45

% The default relative tolerance is 1e-3.

% To set our output to non-negative, we provide an array listing

% each population that we want to constrain. Since this example

% has only a single population, we pass the array [1]

options = odeset('RelTol', 1e-4, 'NonNegative', [1]);

All that is left is to use the ode45 function to get our result. Along with our function definition and our options, we must also specify the interval of our solution (0 to 10) and the initial population (1). We will be given two arrays: one containing the solution and one containing its corresponding time points (because we are using a variable-length time step):

% Use ode45 to solve our ODE

% Place the time points in a vector 't'

% Place the solution in a vector 'x3'

[t,x3] = ode45('exponential', [0 10], 1, options);

That’s it! Now for one final comparison. I cheated and bumped up the resolution of the actual solution as well:

% I increased the solution resolution offline.

plot([0:.5:10], x1, [0:.5:10], x2, t, x3, [0:.1:10], sol)

legend('x1', 'x2', 'x3', 'sol', 2)

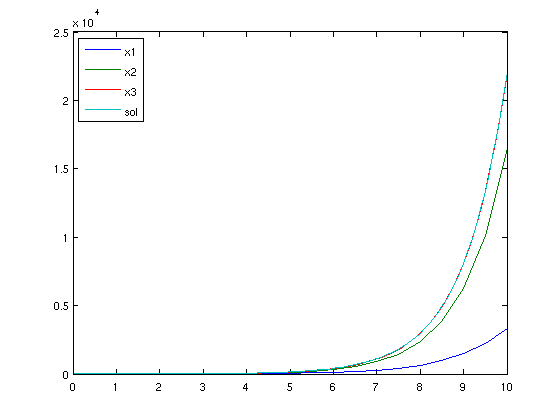

Our ode45 solution is so accurate that it is actually hard to see below the actual solution. To be fair, if you check you can see that the fourth order solution is using 68 time steps versus only 20 for the first and second order solutions. Regardless, I can assure you it vastly outperforms either of the other two.

So, that’s it for Lesson 2! Check back next time as we will be playing around with systems of multiple populations. Specifically, we will examine a Lotka-Volterra predator/prey relationship and a SIR epidemiological example. See you then!

Bit of a beginner here… attempting ode45 section of the tutorial. Copying and pasting text into MATLAB function called exponential.m and running in command line exponential 0.5 1 returns an error “??? Maximum recursion limit of 500 reached. Use set(0,’RecursionLimit’,N)

to change the limit. Be aware that exceeding your available stack space can

crash MATLAB and/or your computer.”

Many thanks

nice tutorial, i wish to get list program “model and simulation temperature dynamic system” I am very happy could send me program it. I Thank you very much

Nice tutorial. Maybe you should mention that, at least with explicit Runge-Kutta methods, it becomes (very) difficult to derive methods of order higher than 4 or 5 due to the enormous amount of order conditions that have to be satisfied by the coefficients.

Cheers!