Neural networks can be used to determine relationships and patterns between inputs and outputs. A simple single-layer feed-forward neural network that has the ability to learn and differentiate data sets is known as a perceptron.

By iteratively “learning” the weights, it is possible for the perceptron to find a solution to linearly separable data (data that can be separated by a hyperplane). In this example, we will run a simple perceptron to determine the solution to a 2-input OR.

X1 or X2 can be defined as follows:

| X1 | X2 | Out |
|---|---|---|
| 0 | 0 | 0 |
| 1 | 0 | 1 |
| 0 | 1 | 1 |
| 1 | 1 | 1 |
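As a quick sanity check of the truth table, the sketch below evaluates OR with MATLAB's built-in `or` and compares it with one hand-picked separating line, x1 + x2 = 0.5. The line is an illustrative assumption, not something the perceptron is guaranteed to learn; it only shows that a single straight line can split the four points.

```matlab
% Inputs in the same row order as the table above
X = [0 0; 1 0; 0 1; 1 1];

% Truth table from the built-in logical OR
truth = double(or(X(:,1), X(:,2)));

% Hand-picked line x1 + x2 = 0.5 (an assumption for illustration):
% points above the line are classified as 1, points below as 0
byLine = double(X(:,1) + X(:,2) - 0.5 > 0);

% Columns: X1, X2, OR, classification by the line
disp([X truth byLine])
```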

If you want to verify this yourself, run the following code in Matlab. Your code can further be modified to fit your personal needs. We first initialize our variables of interest, including the input, desired output, bias, learning coefficient and weights.

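    input = [0 0; 0 1; 1 0; 1 1];      % one row per input pattern [X1 X2]
    numIn = 4;                         % number of input patterns
    desired_out = [0;1;1;1];           % OR target for each row of input
    bias = -1;                         % constant bias input
    coeff = 0.7;                       % learning rate
    rand('state',sum(100*clock));      % seed from the clock (legacy syntax; rng('shuffle') in newer releases)
    weights = -1*2.*rand(3,1);         % random initial weights between -2 and 0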

The input and desired_out variables are self-explanatory, and the bias is initialized to a constant. This value can be set to any non-zero number between -1 and 1. The coeff variable represents the learning rate, which specifies how large an adjustment is made to the network weights after each iteration. The smaller the coefficient, the more conservative the weight adjustments; values approaching 1 produce larger steps. Finally, the weights are randomly assigned.
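To make the role of `coeff` concrete, this sketch applies a single delta-rule update to one weight with two different learning rates. The starting weight, input and error are made-up numbers chosen only for illustration.

```matlab
w     = 0.2;    % hypothetical starting weight
x     = 1;      % hypothetical input value
delta = 0.5;    % hypothetical error (desired output minus actual output)

wSmall = w + 0.1*x*delta;   % coeff = 0.1 moves the weight to 0.25
wLarge = w + 0.7*x*delta;   % coeff = 0.7 moves the weight to 0.55
fprintf('coeff 0.1: %.2f, coeff 0.7: %.2f\n', wSmall, wLarge);
```

The same error produces a step seven times larger with the larger coefficient, which is why a large learning rate converges faster but can overshoot.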

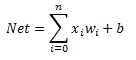

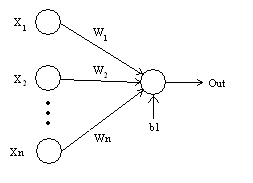

A perceptron is defined by the equation:

$$\text{out} = \sum_{i=1}^{n} w_i x_i + b$$

Therefore, in our example with two inputs, we have w1*x1 + w2*x2 + b = out.

We will assume that weights(1,1) is for the bias and weights(2:3,1) are for X1 and X2, respectively.
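With that layout, the weighted sum for all four input rows can be computed in one matrix product by prepending the bias as a constant column. The weight values below are arbitrary placeholders, not trained values, and the name `Xb` is introduced here only for illustration.

```matlab
input   = [0 0; 0 1; 1 0; 1 1];
bias    = -1;
weights = [0.5; 1; 1];          % placeholder values: [bias weight; w1; w2]

% Prepend the constant bias input so each row is [bias x1 x2]
Xb = [bias*ones(size(input,1),1) input];

% One weighted sum per input row
y = Xb * weights;
disp(y')
```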

One more variable we will set is the iterations, specifying how many times to train or go through and modify the weights.

iterations = 10;

Now the feed forward perceptron code.

    for i = 1:iterations
        out = zeros(4,1);
        for j = 1:numIn
            % weighted sum of the bias and the two inputs
            y = bias*weights(1,1)+...
                input(j,1)*weights(2,1)+input(j,2)*weights(3,1);
            out(j) = 1/(1+exp(-y));            % sigmoid activation
            delta = desired_out(j)-out(j);     % error for this pattern
            % delta rule: adjust each weight by its input times the error
            weights(1,1) = weights(1,1)+coeff*bias*delta;
            weights(2,1) = weights(2,1)+coeff*input(j,1)*delta;
            weights(3,1) = weights(3,1)+coeff*input(j,2)*delta;
        end
    end

A little explanation of the code: first, ‘out’ is computed from the equation mentioned above and then run through a sigmoid function so that values are squashed between 0 and 1. The weights are then modified iteratively based on the delta rule, which moves each weight in proportion to its input and the remaining error.
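The two steps described above, squashing and the delta-rule update, can be traced by hand for a single input pattern. The starting weights are zeros here purely so the numbers are easy to follow; that is an assumption of this sketch, not what the example uses.

```matlab
sigmoid = @(y) 1./(1+exp(-y));   % squashes any real value into (0,1)

bias = -1;  coeff = 0.7;
weights = zeros(3,1);            % illustrative starting point
x = [0 1];  target = 1;          % one row of the OR table

y     = bias*weights(1) + x(1)*weights(2) + x(2)*weights(3);
out   = sigmoid(y);              % 0.5 when all weights are zero
delta = target - out;            % 0.5

% Delta rule: move each weight in proportion to its input and the error
weights = weights + coeff*delta*[bias; x(1); x(2)];
disp(weights')                   % -0.35  0  0.35
```

Note that the weight for X1 does not move, because its input was 0 for this pattern.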

When running the perceptron over 10 iterations, the outputs begin to converge, but are still not precisely as expected:

out = 0.3756 0.8596 0.9244 0.9952

weights = 0.6166 3.2359 2.7409

As the iterations approach 1000, the output converges towards the desired output.

out = 0.0043 0.9984 0.9987 1.0000

weights = 5.4423 12.1084 11.8823

As the OR logic condition is linearly separable, a solution will be reached after a finite number of loops. Convergence time can also change based on the initial weights, the learning rate, the transfer function (sigmoid, linear, etc.) and the learning rule (in this case the delta rule is used, but other algorithms such as Levenberg-Marquardt also exist). If you are interested, try running the same code for other logical conditions like ‘AND’ or ‘NAND’ to see what you get.
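As a starting point for that experiment, the sketch below reruns the same training loop for AND and NAND targets. The zero initial weights, the 2000 iterations and the names `targets` and `results` are assumptions made so the run is repeatable; they are not values from the example above.

```matlab
input   = [0 0; 0 1; 1 0; 1 1];
bias    = -1;  coeff = 0.7;  iterations = 2000;
targets = [0 0 0 1; 1 1 1 0]';   % column 1: AND, column 2: NAND
results = zeros(4, 2);           % rounded outputs after training

for t = 1:size(targets,2)
    desired_out = targets(:,t);
    weights = zeros(3,1);        % fixed start instead of rand
    for i = 1:iterations
        for j = 1:4
            x = [bias; input(j,:)'];               % [bias; x1; x2]
            out = 1/(1+exp(-(weights'*x)));        % sigmoid output
            weights = weights + coeff*(desired_out(j)-out)*x;
        end
    end
    % Evaluate all four patterns with the trained weights
    final = 1./(1+exp(-[bias*ones(4,1) input]*weights));
    results(:,t) = round(final);
end

disp(results)
```

Only `desired_out` changes between gates; the input matrix, bias and update rule stay the same.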

While single layer perceptrons like this can solve simple linearly separable data, they are not suitable for non-separable data, such as the XOR. In order to learn such a data set, you will need to use a multi-layer perceptron.
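The limitation can be checked empirically. The sketch below trains the same single-layer loop on XOR targets; the zero initial weights and the iteration count are assumptions for repeatability. Whatever weights result, at least one of the four patterns ends up on the wrong side of 0.5, because no single line separates the XOR classes.

```matlab
input = [0 0; 0 1; 1 0; 1 1];
desired_out = [0; 1; 1; 0];      % XOR targets
bias = -1;  coeff = 0.7;
weights = zeros(3,1);            % fixed start instead of rand

for i = 1:1000
    for j = 1:4
        x = [bias; input(j,:)'];
        out = 1/(1+exp(-(weights'*x)));
        weights = weights + coeff*(desired_out(j)-out)*x;
    end
end

% Threshold the trained outputs at 0.5 and count the correct patterns
final = 1./(1+exp(-[bias*ones(4,1) input]*weights));
numCorrect = sum((final >= 0.5) == desired_out);
fprintf('%d of 4 XOR patterns classified correctly\n', numCorrect);
```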

Thank you for this… It is worth mentioning that an alternative and widespread definition of the perceptron is a neuron whose activation function is the Heaviside step function (0 for x 0). The sigmoid function proposed here is an approximation of the Heaviside function. The sigmoid is actually better because, unlike the Heaviside function, it is continuous, so the neural network is trained more efficiently (faster, does not get stuck)… It’s just that a student required to implement the Heaviside function in an assignment should expect slightly different results.

Just noticed that my definition of the Heaviside function in the brackets got transformed by this platform for some reason, after I had posted. What I meant to say: the function equals 0 for arguments below 0, it equals 0.5 at 0 and it equals 1 for arguments above 0.
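For readers who need the step-function variant, here is a minimal sketch of the classic perceptron learning rule on the same OR data. It assumes a step that outputs 1 only for strictly positive sums and starts from zero weights; both are illustrative choices, so an assignment that uses a different convention at exactly 0 may give different weights.

```matlab
input = [0 0; 0 1; 1 0; 1 1];
desired_out = [0; 1; 1; 1];
bias = -1;  coeff = 0.7;
weights = zeros(3,1);            % assumed starting point
step = @(y) double(y > 0);       % assumed convention at y = 0

for i = 1:20
    for j = 1:4
        x = [bias; input(j,:)'];
        delta = desired_out(j) - step(weights'*x);   % -1, 0 or 1
        weights = weights + coeff*delta*x;           % no change when delta is 0
    end
end

out = step([bias*ones(4,1) input]*weights);
disp(out')                       % 0 1 1 1
```

Because `delta` is exactly 0 for a correctly classified pattern, the weights only change on mistakes, unlike the sigmoid version where every pattern nudges the weights a little.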

How can I plot this on a graph?

Can I use this code for an ‘AND’ gate by changing it like

‘desired_out = [0,0,0,1] ‘ ? Is this change enough?

And another question:

Does this OR gate perceptron use the ‘step’ or ‘sign’ activation function?

I ask because I want to know whether we can use the hardlims function (sign) in an AND gate. Is it possible?

Thank you

Hi everyone,

I am trying to use this code for ‘AND’ by using the

‘desired_out = [0,0,0,1] ‘ but the result are same as for

‘OR’ .

Please tell me how to implement AND !

hi

I would like a 3-input AND gate perceptron in MATLAB.

Can you help me?

hi,

I would like to ask:

the code given above does not call

something like this

net=newff([0 2], [25,1], {'tansig','purelin'},'trainlm').

Will it also run as a neural network in MATLAB?

How does MATLAB know it is a neural network?

The code does not have net=newff([0 2], [25,1], {'tansig','purelin'},'trainlm')

because the writer is not using the Neural Network Toolbox in MATLAB, but rather writing out by hand the code that a function like ‘newff’ would otherwise provide. I hope this answers at least part of the question.

Hi… this is my Scilab code, in which I am using the ann_PERCEPTRON function.

// Define at the SCILAB command window, the training inputs and targets

p = [57.80; 65.71; 47.91; 23.22; 43.85; 27.22; 23.36; 30.31; 58.78; 29.25; 42.29; 27.31; 56.12; 51.13; 57.36; 46.45; 25.76; 50.89; 30.69; 29.34; 72.11; 80.68; 28.90; 23.52; 53.28; 29.28; 25.22; 36.53; 25.92; 28.42; 20.70; 16.96; 16.13; 20.69; 20.85; 19.69; 18.37; 19.67; 17.61; 16.35; 18.13; 22.17; 17.19; 17.13; 18.45; 18.29; 17.91; 17.19; 21.47; 19.36];

t = [1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 1; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0; 0];

// Create the perceptron and Train it

[w,b] = ann_PERCEPTRON(p,t);

// To test the performance, simulate the perceptron with p

y = ann_PERCEPTRON_run(p,w,b)

For this code it shows me

weight (w) = 50 x 50 values

bias (b) = 50 values

I have 50 input values (p) and 50 output values (t).

What I need is just 50 weight values (w) and only 1 bias value (b).

Can anybody here help me?

(Sorry for my bad English.)

Hello…

Can anybody tell me the code for perceptron model for sum of two numbers………

Why do you update weights always? They should be updated if input is not correctly classified.

I have a question about the first step, which sets up the parameters.

There,

rand('state',sum(100*clock));

weights = -1*2.*rand(3,1);

are given, but I don't understand the meaning of

rand('state',sum(100*clock));

In my opinion, the weights depend only on rand(3,1).

Also, rand(3,1) and rand('state',sum(100*clock)) are independent, so I think rand('state',sum(100*clock))

has no meaning in this code.

Is that right?

What does rand('state',sum(100*clock)); mean?

I don't think the rand('state'…) line is required either. It has to do with initialising the random number generator: seeding it from the clock means the generator starts from a different state each time the script runs, so the initial weights differ from run to run.
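In current MATLAB releases the same seeding is usually written with `rng`. Here is a short sketch of the difference between a clock-based seed and a fixed seed; the seed value 42 and the variable names are arbitrary choices for illustration.

```matlab
rng('shuffle');          % seed from the current time: different weights each run
w1 = rand(3,1);

rng(42);                 % fixed seed: the same "random" weights every run
w2 = rand(3,1);
rng(42);                 % reset to the same seed
w3 = rand(3,1);

disp(isequal(w2, w3))    % 1
```

A fixed seed is handy while debugging, because every run then starts from the same weights.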

hi dear

how can i implement a back propagation algorithm in matlab without neural network toolbox

and our input are matrices with variant dimension

more over a curve for error

best wish

%is this correct for any number of input and output units?

clc

%define the corresponding input and output values (must have the same

%amount of rows/patterns in each). Number of columns in each array are number of input

%and output units respectively

input = [1 1 1 0 0 0 1; 0 1 0 1 1 0 1; 0 1 1 0 1 1 0; 0 0 1 1 0 1 1;0 0 1 1 1 1 0; 0 1 0 0 1 1 1]

desired_out = [1 0 0 0 0 0;0 1 0 0 0 0;0 0 1 0 0 0;0 0 0 1 0 0;0 0 0 0 1 0;0 0 0 0 0 1];

%define number of units

[numPat numIn] = size(input); %number of patterns (numPat) and number of input units (numIn)

[r numOut]=size(desired_out); %number of output units (numOut)

%~~~~~~~~~~~~~~~~~~~~~~~~~~~~

%set constants

bias = -1;

coeff = 0.7;

%initialise weights

rand('state',sum(100*clock));

a = -2; %min random weight

b= 2; %max random weight

weights = a + (b-a).*rand(numIn,numOut+1);

iterations = 200;

for i = 1:iterations

out = zeros(numPat,numOut);

for j = 1:numPat %for each pattern

for k = 1:numOut %for each output

%sum all of the inputs (L) to the output (k) for this pattern (j)

y=bias*weights(k,numOut+1); %put bias in last weight slot. The bias is the innate bias of an output unit (k)

for L = 1:numIn %for each input unit

y = y+input(j,L)*weights(L,k); %cycle through the inputs (L) for the current pattern (j)

end

%get the delta error for the output unit (the output should

%match the desired output)

out(j,k) = 1/(1+exp(-y));

delta = desired_out(j,k)-out(j,k);

%adjust the weights

for L=1:numIn

weights(L,k)=weights(L,k)+coeff*input(j,L)*delta; %adjust weight from input (L) to output (k) for pattern (j)

end

weights(k,numOut+1)=weights(k,numOut+1)+coeff*bias*delta; %adjust the bias for this output

end

end

end

weights

out

i think you should swap iterators of numPat and numIn.

in fact, learning process refines weights for each feature of samples.
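One way to make the indexing independent of how `numIn` and `numOut` compare is to give the bias its own row, so that `weights` is `(numIn+1)`-by-`numOut`. This is a sketch of that layout, not the code posted above; the small data set (OR, AND and NOR as three output units), the zero initial weights and the iteration count are assumptions for illustration.

```matlab
input = [0 0; 0 1; 1 0; 1 1];                % 4 patterns, 2 input units
desired_out = [0 0 1; 1 0 0; 1 0 0; 1 1 0];  % 3 output units: OR, AND, NOR
[numPat, numIn] = size(input);
numOut = size(desired_out, 2);

bias = -1;  coeff = 0.7;
weights = zeros(numIn+1, numOut);            % last row holds the bias weights

for i = 1:2000
    for j = 1:numPat
        x = [input(j,:) bias];               % 1 x (numIn+1)
        out = 1./(1+exp(-x*weights));        % 1 x numOut
        delta = desired_out(j,:) - out;      % one error per output unit
        weights = weights + coeff * x' * delta;   % outer-product update
    end
end

out = 1./(1+exp(-[input bias*ones(numPat,1)]*weights));
disp(round(out))
```

Here there are more output units than input units, which is the case where storing the bias in column `numOut+1` of a `numIn`-row matrix would index out of bounds.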

This is my code

clear

clc

Class = [1 1 2 2];

Agment = 1;

V = [0;0;0];

C = 1;

X1 = [Agment;2;1];

X2 = [Agment;2;5];

X3 = [Agment;5;2];

X4 = [Agment;4;6];

X = [X1 X2 X3 X4];

for I = 1 : size(X,2)

if Class(1, I) == 2

X(:, I) = X(:, I) * (-1);

end

end

K = 1;

for Step = 1 : 100

t=(V' * X(:, K));

if (V' * X(:, K)) <= 0

V = V + (C * X(:, K));

end

K = mod(K, size(X,2));

K = K + 1;

end

Hello, I am new to MATLAB. Where can I find the Neural Network Toolbox so I can add it to my R2012b MATLAB?

Thanks!

It’s useful

Now I want to enter only a 2-bit input and get the answer. What should I do? I mean, the input is a matrix, and I want the inputs to be only 2 bits: [0 1] or [1 0] or [0 0] or [1 1].

%here it is, it works well

clear out

test=[1 1];

y = bias*weights(1,1)+...

test(1,1)*weights(2,1)+test(1,2)*weights(3,1);

out = 1/(1+exp(-y))

hi

i am just the beginner using the nn tool in matlab….the first question is when we have to give both inputs and outputs to the nn gui tool in feedforward algorithm then for what we are using it…..i mean if we have output then why do we need to use that tool….please someone help me…i am not able to understand anything…..PLZZZZZZZZZZZZZZZ

neural network is a kind of thing that learn from experience. So by giving inputs and outputs we are train’n it to recognize another input which is similar in pattern we trained. In here, by giving inputs and outputs we are train’n the network.

Hi,

Could you please tell how to implement perceptron when we have an image of size(say 50×50) as input and more than one output(say 5 options)..

Question again why are there 3 weights? and i tried solving the weight output but it doesnt give me the correct answer like

input 1 = 1;

input 2 = 1;

desired output = 1;

the weight given to me by the code after i executed the code is

36.2816

24.0756

24.0756

so,

1×36.2816 = 36.2816

1×24.0756 = 24.0756

Bias = -1

36.2816+24.0756+(-1) = 59.3572

why is the answer not 1?
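A likely source of the mismatch is the pairing of the terms: in the article's code the first weight multiplies the bias (-1), the other two multiply the inputs, and the weighted sum is then passed through the sigmoid. A sketch of that calculation with the weights quoted above:

```matlab
weights = [36.2816; 24.0756; 24.0756];   % values quoted in the question
bias = -1;
x = [1 1];

% weights(1) pairs with the bias, weights(2:3) with the two inputs
y = bias*weights(1) + x(1)*weights(2) + x(2)*weights(3);   % 11.8696
out = 1/(1+exp(-y));                                       % very close to 1
fprintf('y = %.4f, out = %.6f\n', y, out);
```

The raw sum is not expected to equal 1; it is the sigmoid of the sum that approaches the desired output.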

why is the rule for perceptron not included here?

the three rules where can i find it in this code?

the classify Xk part

https://www.cs.washington.edu/education/courses/415/04wi/slides/Neural/sld009.htm

How do I load a 4×18 Excel chart into a perceptron with nntool?

Thanks

how can i solve a differential equation using neural network scheem in matlab

suppose my equation is dy/dx = 3*sin(x)+e^2x

I’m interested in this.

Do you have any findings or code to share in solving DE?

hi all,

I have a question and i really need help coz I’ve tried everything but in vain.

i have a matrix A = [k x 1] where k = 10,000 and ranging, say, from a to b, randomly.

I need to get a matrix B = [m x 1] from A, where m is from a to c ( a<c<b),…

basically, i want to do is to "shrink" A and get a smaller matrix B.

Thanks everybody.

Nietzsche.

From your question, I’m assuming something like the following?:

% Preallocate a random 10000 x 1 matrix A

A = rand(10000,1);

a = min(A)

b = max(A)

% set c = to a random value in between a and b. Lets choose 0.5 for this example

c = 0.5

% Then create B from the values between a and c

B = A(A<=c) % Can also use the following, though the second part is redundant in this case B = A(A<=c & A>=a)

hi

thanks for your tutorial could you please explain how to solve differential equation in neural networks

Regards

Deepika,

We’ll try to do something on this in the near future. Thanks,

Vipul

shouldn’t the input entered be:

input = [0 0; 1 0; 0 1; 1 1];

Instead of….

input = [0 0; 0 1; 1 0; 1 1];

I’m sure I’m just confused but I need to use the following input data (and am uncertain about how to enter it):

X1=0, 0, 1, 1

X2=0, 1, 0, 1

would it be

input = [0 0;0 1; 1 0; 1 1]

or

input = [0 0;1 0;0 1;1 1]

Your help would be much appreciated

You are correct. In our example here for OR, both [1 0] and [0 1] map to an output of 1 though, so it works still.

If you have a matrix of inputs = [X1 X2] which are defined as follows:

X1=0, 0, 1, 1

X2=0, 1, 0, 1

Then you would use this:

input = [0 0;0 1; 1 0; 1 1]

Hello

I’ve tried this example. I always get same results:

Out

0.5

0.5

0.5

0.5

weights:

0

0

0

I don’t know what is wrong with my code. please help. here is my code

input =[0 0; 0 1; 1 0; 1 1];

numIn = 4;

desired_out = [0;1;1;1];

bias = -1;

coeff = 1;

%rand('state', sum(100*clock));

weights = -1*2.*rand(3,1);

iterations = 10000;

for i =1:iterations

out = zeros(4,1);

for j=1:numIn

y = bias*weights(1,1)+...

input(j,1)*weights(2,1)+input(j,2)*weights(3,1);

out(j) = 1/(1+exp(-y));

delta=desired_out(j)-out(j);

weights(1,1)=weights(1,1)*coeff*bias*delta;

weights(2,1)=weights(2,1)*coeff*input(j,1)*delta;

weights(3,1)=weights(3,1)*coeff*input(j,2)*delta;

end

end

solved

hi, thanks for the good explaination about perceptron.

I hv one question, this program is to train the input right??

then..how i’m going to test the input for classification using perceptron ?

David,

I don’t know if I follow your question. You could plot the results, residuals, MSE errors or other variables over each iteration. If you want to do something like this, that would be possible. If this isn’t what you were looking for, let me know.
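As one example of that suggestion, the sketch below records the mean squared error after each iteration and plots it. The zero initial weights and the variable name `mseHist` are assumptions added for illustration; the rest follows the training loop in the article.

```matlab
input = [0 0; 0 1; 1 0; 1 1];
desired_out = [0; 1; 1; 1];
bias = -1;  coeff = 0.7;  iterations = 200;
weights = zeros(3,1);                    % fixed start instead of rand
mseHist = zeros(iterations,1);           % hypothetical name for the error log

for i = 1:iterations
    out = zeros(4,1);
    for j = 1:4
        x = [bias; input(j,:)'];
        out(j) = 1/(1+exp(-(weights'*x)));
        weights = weights + coeff*(desired_out(j)-out(j))*x;
    end
    % error over the four patterns seen during this iteration
    mseHist(i) = mean((desired_out - out).^2);
end

plot(1:iterations, mseHist);
xlabel('Iteration');  ylabel('Mean squared error');
```

The curve should fall quickly at first and then flatten as the outputs approach their targets.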

Vipul

sorry for my english

how I can plot this perceptron?

Thanks.

Saeed,

An implementation of a multilayer perceptron is now available.

https://matlabgeeks.com/tips-tutorials/neural-networks-a-multilayer-perceptron-in-matlab/

Take care,

Vipul

hi

thank you for having this brief and useful tutorial.

I’d really appreciate if you send me a multilayer perceptron implementation using matlab .

best regards.